Airflow tutorial 3: Set up an Airflow environment using Google Cloud Composer

In this tutorial, we will learn how to set up an Airflow environment using Google Cloud Composer.

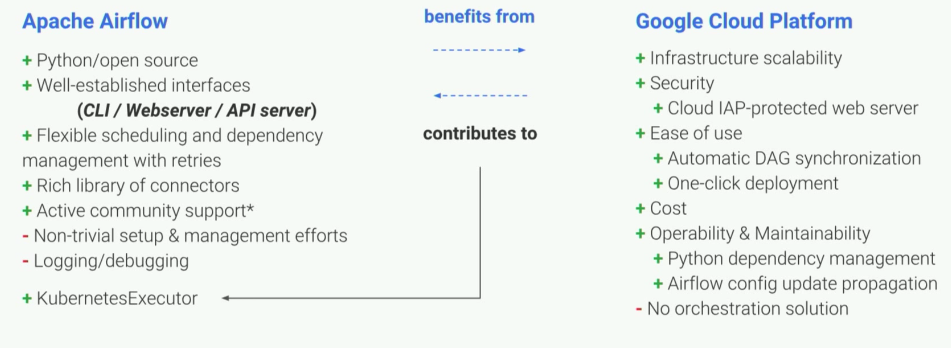

Overview of Cloud Composer

- A fully managed Apache Airflow service that makes workflow creation and management easy, powerful, and consistent.

- Cloud Composer helps you create Airflow environments quickly and easily, so you can focus on your workflows and not your infrastructure.

Hosting Airflow on-premise

Let’s say you want to host Airflow on-premises. In other words, you host Airflow on your own server. This approach has several problems:

- You will need to spend a lot of time on DevOps work: creating a new server, managing the Airflow installation, taking care of dependency and package management, and making sure your server is always up and running. Then you have to deal with scaling and security issues.

- If you don’t want to deal with all of those DevOps problems and just want to focus on your workflows, then Google Cloud Composer is a great solution for you.

Google Cloud Composer benefits

- The nice thing about Google Cloud Composer is that you as a Data Engineer or Data Scientist don’t have to spend that much time on DevOps.

- You just focus on your workflows (writing code), and let Composer manage the infrastructure.

- Of course you have to pay for the hosting service, but the cost is low compared to hosting a production Airflow server on your own. This is an ideal solution if you are a startup in need of Airflow and you don’t have a lot of DevOps folks in-house.

Key Cloud Composer features

- Simplicity:

  - One click to create a new Airflow environment

  - Client tooling including Google Cloud SDK and Google Developer Console

  - Easy and controlled access to the Airflow Web UI

- Security:

  - Identity and Access Management (IAM): manage credentials, permissions, and access policies.

- Scalability:

  - Easy to scale with Google infrastructure.

- Production monitoring:

  - Stackdriver logging and monitoring: provides logging and monitoring metrics, and alerts you when your workflow is not running.

- Simplified DAG (workflow) management

- Python package management

- Comprehensive GCP integration:

  - Integrates with all Google Cloud services: Big Data, Machine Learning…

  - Run jobs elsewhere: on other cloud providers or on-premises.

Releases

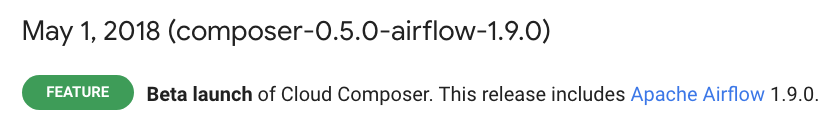

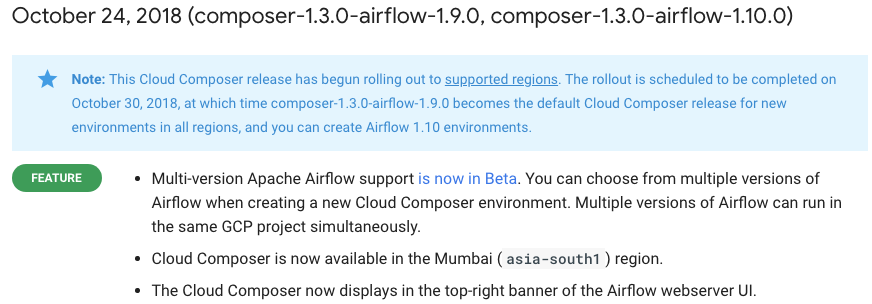

Google Cloud Composer is a new product from Google. Google's decision to offer Airflow as a managed service is a strong signal of how widely Apache Airflow is being adopted in the software industry.

- First beta release: May 1, 2018 (6 months ago)

- Latest release: October 24, 2018

- Supports Python 3 and Airflow 1.10.0

Set up Google Cloud Composer environment

- It’s extremely easy to set up. If you have a Google Cloud account, it’s really just a few clicks away.

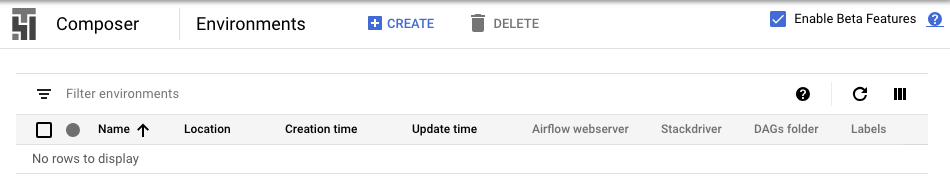

Composer environment

- You can create multiple environments within a project.

- Each environment runs on its own Kubernetes cluster with multiple nodes, so environments are isolated from each other.

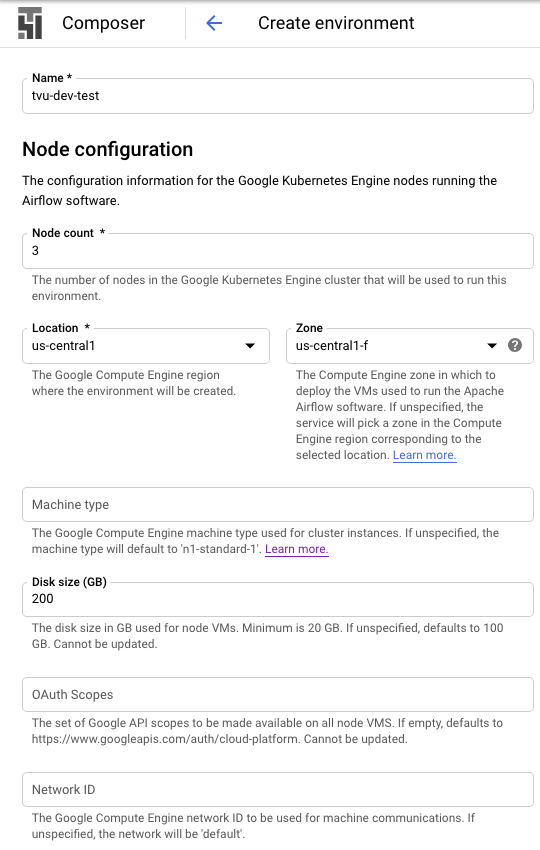

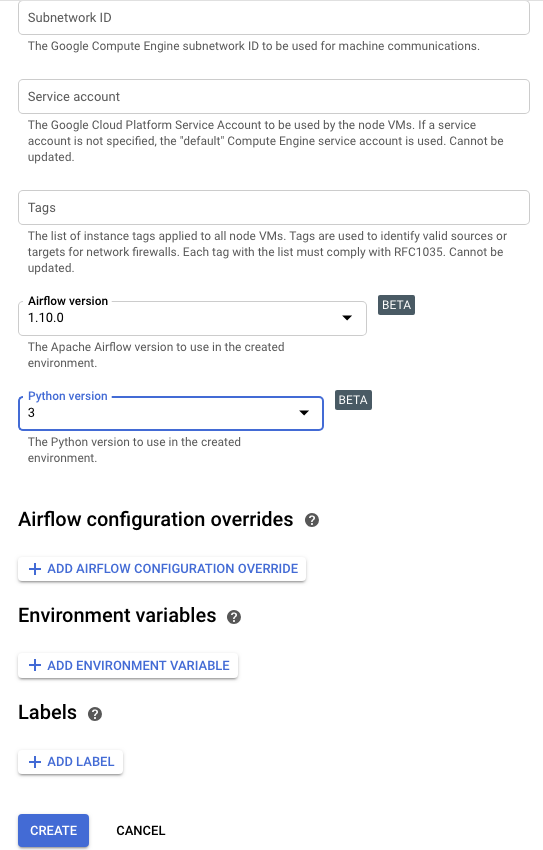

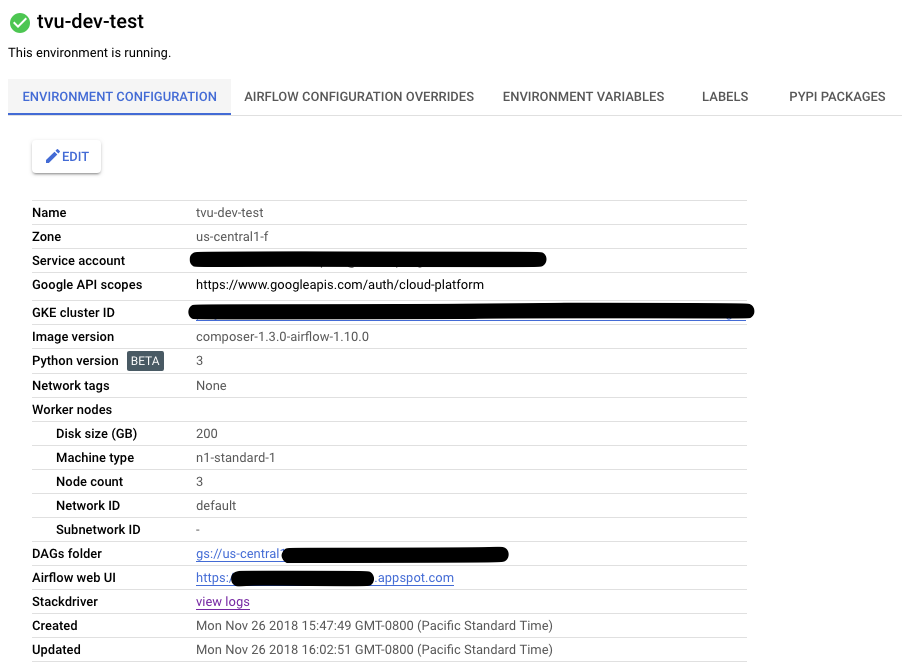

Create an environment

- Choose how many nodes and disk size

- Choose Airflow and Python version
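The same choices can also be made from the command line instead of the console. The snippet below is a minimal sketch that only builds a `gcloud composer environments create` command and prints it. The helper name `build_create_command`, the environment name `my-airflow-env`, and the location `us-central1` are assumptions for illustration, and the flags reflect the Composer CLI around the time of Airflow 1.10, so check `gcloud composer environments create --help` for your SDK version before running anything.

```python
import shlex


def build_create_command(name, location, node_count=3, disk_size_gb=20,
                         python_version="3", airflow_version=None):
    """Build (but do not run) the argv list for creating a Composer environment.

    All default values here are illustrative assumptions, not recommendations.
    """
    cmd = [
        "gcloud", "composer", "environments", "create", name,
        "--location", location,
        "--node-count", str(node_count),      # how many nodes
        "--disk-size", f"{disk_size_gb}GB",   # disk size per node
        "--python-version", python_version,   # Python version
    ]
    if airflow_version is not None:
        # Only pin the Airflow version when the caller asks for one.
        cmd += ["--airflow-version", airflow_version]
    return cmd


# Hypothetical environment name and location.
create_cmd = build_create_command("my-airflow-env", "us-central1",
                                  airflow_version="1.10.0")
print(shlex.join(create_cmd))
```

To actually create the environment, you could pass the list to `subprocess.run(create_cmd, check=True)` on a machine where the Google Cloud SDK is installed and authenticated.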

A complete Composer environment

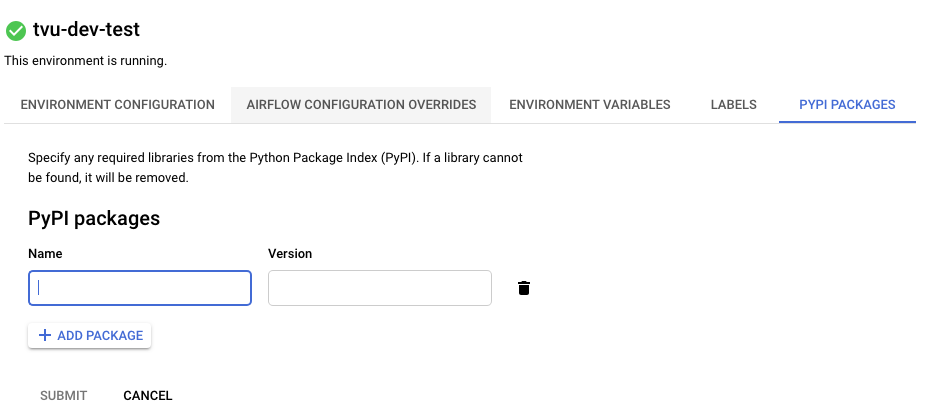

Installing Python dependencies

- Installing a Python dependency from Python Package Index (PyPI)
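Besides the console, a PyPI package can be added with the `gcloud composer environments update` command. The following is a rough sketch that only assembles that command. The helper name `build_pypi_install_command`, the environment name, the location, and the package specifier are all hypothetical, and you should confirm the `--update-pypi-package` flag against your installed SDK version.

```python
import shlex


def build_pypi_install_command(environment, location, package_spec):
    """Build (but do not run) a command that installs one PyPI package.

    package_spec is a package name with an optional version specifier,
    for example "scipy>=0.13.3".
    """
    return [
        "gcloud", "composer", "environments", "update", environment,
        "--location", location,
        f"--update-pypi-package={package_spec}",
    ]


# Hypothetical environment, location, and package.
install_cmd = build_pypi_install_command(
    "my-airflow-env", "us-central1", "scipy>=0.13.3"
)
print(shlex.join(install_cmd))
```

Updating the environment this way can take several minutes, because Composer has to rebuild the environment with the new dependency.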

Deployment

Deployment is simple. Google Cloud Composer uses Cloud Storage to store Apache Airflow DAGs, so you can easily add, update, and delete a DAG from your environment.

- Manual deployment:

  - You can drag-and-drop your Python .py file for the DAG to the Composer environment’s dags folder in Cloud Storage to deploy new DAGs. Within seconds the DAG appears in the Airflow UI.

  - You can also use a gcloud SDK command to deploy a new DAG.

- Auto deployment:

  - Your DAG files are stored in a Git repository. You can set up a continuous integration pipeline to deploy them automatically every time a merge request is merged into the master branch.
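As an illustration of what a deployment step could look like, whether run by hand or from a CI job, here is a minimal sketch. It collects the `.py` files in a local DAG directory and builds one `gcloud composer environments storage dags import` command per file. The helper name `build_dag_import_commands`, the file names, the environment name, and the location are assumptions, and the sketch prints the commands rather than running them.

```python
import shlex
import tempfile
from pathlib import Path


def build_dag_import_commands(dags_dir, environment, location):
    """Build one DAG import command per .py file found in dags_dir."""
    commands = []
    for dag_file in sorted(Path(dags_dir).glob("*.py")):
        commands.append([
            "gcloud", "composer", "environments", "storage", "dags", "import",
            "--environment", environment,
            "--location", location,
            "--source", str(dag_file),
        ])
    return commands


# Demo with a throwaway directory standing in for a Git checkout.
with tempfile.TemporaryDirectory() as checkout:
    (Path(checkout) / "example_dag.py").write_text("# DAG definition goes here\n")
    (Path(checkout) / "README.txt").write_text("not a DAG\n")  # ignored
    for cmd in build_dag_import_commands(checkout, "my-airflow-env", "us-central1"):
        print(shlex.join(cmd))
```

In a real pipeline, each command could be executed with `subprocess.run(cmd, check=True)` after the merge to master, so that a failed upload fails the build.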

More information

- Cloud Composer official documentation

- Watch the full talk from Google: live demo of getting a workflow up and running in Google Cloud Composer.
